Robots are already present in many areas, taking over tasks that are too dangerous, too repetitive, or require higher precision than humans can deliver. So far, however, the robots used in practice are preprogrammed. Because our world is complex and constantly changing, preprogrammed robots are limited to well-controlled situations. In mammals, innate information, which corresponds to the preprogrammed part of a robot, is substantially complemented by learning and self-organization. Motivated by this, our research vision is to create autonomously learning and developing robotic agents that can serve as versatile assistants for humans.

To fulfill this vision, we have to tackle a wide range of open challenges. We believe that fundamental improvements in learning theory and learning methods have the potential to solve many of them. Paired with the increasing availability of data in the robotic domain, learning methods are becoming competitive with purely engineered solutions and will surpass them in many applications.

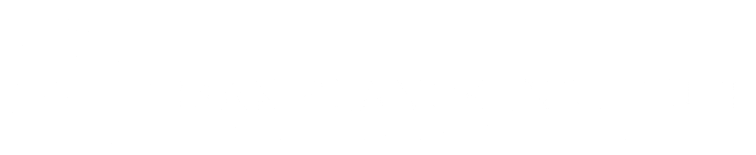

Taking inspiration from self-organization in nature, which evidently helps to build large, fault-tolerant systems from local interactions, our research investigates the theoretical basis for self-organized robot control [ ] using dynamical systems theory and information theory. Recent improvements allow for efficient self-exploration of sensorimotor capabilities [ ] and for the extraction and recombination of behavioral primitives [ ].

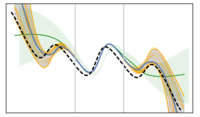

To learn new skills quickly, robots have to extract as much information as possible from experience. A learned model of the robot's own body and of its environment can capture the information needed to master unknown future tasks. We investigate model learning [ ], suitable optimization and planning methods [ ], and their integration into reinforcement learning algorithms [ ]. We consider this a promising route to making reinforcement learning data-efficient enough to be applied easily to real systems.
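
The sketch below illustrates the general pattern of first learning a model from experience and then planning with it. It is a minimal, hypothetical example, not one of the group's algorithms: the 1-D system `true_dynamics` is invented, the learned model is assumed to be linear and is fitted by ordinary least squares, and planning uses random-shooting model-predictive control as a simple stand-in for the optimization and planning methods mentioned above.

```python
# Hypothetical sketch: learn a dynamics model from random experience, then plan
# with it via random-shooting MPC. Linear model and toy system are assumptions.
import random


def true_dynamics(x, u):
    """Invented 1-D system the agent does not know: x' = 0.9 x + 0.5 u."""
    return 0.9 * x + 0.5 * u


def fit_linear_model(transitions):
    """Least-squares fit of x' ~ a*x + b*u from (x, u, x_next) tuples."""
    sxx = sum(x * x for x, _, _ in transitions)
    sxu = sum(x * u for x, u, _ in transitions)
    suu = sum(u * u for _, u, _ in transitions)
    sxy = sum(x * y for x, _, y in transitions)
    suy = sum(u * y for _, u, y in transitions)
    det = sxx * suu - sxu * sxu
    # Closed-form (Cramer's rule) solution of the 2x2 normal equations.
    a = (suu * sxy - sxu * suy) / det
    b = (sxx * suy - sxu * sxy) / det
    return a, b


def plan_action(model, x, goal, rng, horizon=5, n_samples=500):
    """Return the first action of the sampled action sequence whose
    imagined rollout in the learned model stays closest to the goal."""
    a, b = model
    best_cost, best_first = float("inf"), 0.0
    for _ in range(n_samples):
        actions = [rng.uniform(-1.0, 1.0) for _ in range(horizon)]
        x_sim, cost = x, 0.0
        for u in actions:
            x_sim = a * x_sim + b * u  # imagined step, no real interaction
            cost += (x_sim - goal) ** 2
        if cost < best_cost:
            best_cost, best_first = cost, actions[0]
    return best_first


rng = random.Random(0)

# 1) Collect experience with random actions and fit the model.
data, x = [], 0.0
for _ in range(100):
    u = rng.uniform(-1.0, 1.0)
    x_next = true_dynamics(x, u)
    data.append((x, u, x_next))
    x = x_next
model = fit_linear_model(data)

# 2) Act in the real system, re-planning with the learned model at every step.
x, goal = 0.0, 2.0
for _ in range(30):
    x = true_dynamics(x, plan_action(model, x, goal, rng))
print(model, x)
```

Random shooting is used here only because it fits in a few lines; the point is that all trial and error during planning happens inside the learned model, so the real system is only queried for the 100 exploration steps and the 30 executed actions.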

For autonomously learning agents, it is also important to set their own goals for improving their skills, so that a designer does not need to specify every detail of the learning curriculum. In [ ] we propose a framework and a practical implementation of an intrinsically motivated hierarchical learner that attempts to gain control of its environment as quickly as possible. Another important insight is that knowing the causal influence of a robot on its environment can greatly accelerate learning. Our first contribution in this direction proposes a tractable quantity to autonomously identify situation-dependent causal influence [ ]. When learning from raw camera input in an unsupervised way, suitable representations have to be learned as well. In this context, we study object-centric representations and suitable unsupervised reinforcement learning methods [ ]. Because unsupervised representation learning is generally very important for autonomously learning agents, we also investigate commonly used algorithms from a theoretical perspective [ ].
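
As an illustration of what a tractable measure of situation-dependent causal influence can look like, the sketch below assumes one natural choice: the conditional mutual information I(S'; A | S = s) between the agent's action and the next state, evaluated in a single state s. It further assumes a small discrete transition model that is known exactly; the states, actions, and probabilities are hypothetical. A value of zero means that, in this situation, the action has no influence on the outcome.

```python
# Hypothetical sketch: causal influence of the action in one state s, measured
# (by assumption) as I(S'; A | S = s) for a known discrete transition model.
import math


def causal_influence(transition, policy):
    """I(S'; A | S = s) in nats.

    transition[a][s_next] = p(s_next | s, a); policy[a] = pi(a | s).
    """
    # Marginal next-state distribution p(s' | s) under the policy.
    marginal = {}
    for a, pa in policy.items():
        for s_next, p in transition[a].items():
            marginal[s_next] = marginal.get(s_next, 0.0) + pa * p
    # Expected KL divergence between p(s' | s, a) and p(s' | s).
    info = 0.0
    for a, pa in policy.items():
        for s_next, p in transition[a].items():
            if p > 0.0:
                info += pa * p * math.log(p / marginal[s_next])
    return info


uniform = {"push_left": 0.5, "push_right": 0.5}
# Gripper far from the object: whatever the action, the object stays put.
far = {"push_left": {"obj_stays": 1.0}, "push_right": {"obj_stays": 1.0}}
# Gripper in contact: the action largely determines where the object moves.
contact = {"push_left": {"obj_left": 0.9, "obj_right": 0.1},
           "push_right": {"obj_left": 0.1, "obj_right": 0.9}}

print(causal_influence(far, uniform))      # 0.0
print(causal_influence(contact, uniform))  # ~0.368 nats
```

An agent could, for example, use such a score to prefer exploring situations like `contact`, where its actions actually matter.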

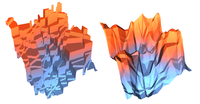

A parallel strand of our research aims to enhance the capabilities of machine learning models for model learning and reasoning. We develop deep-learning-based symbolic regression methods, i.e. algorithms that attempt to identify the relationships underlying data as concise symbolic or algebraic expressions [ ]. These methods are useful not only for autonomous agents but also for solving problems in the natural sciences. Together with our collaborators, we investigate several exciting topics in physics [ ] and are looking for further application domains.
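
To make the goal of symbolic regression concrete, the sketch below recovers a hidden law from noise-free samples. It deliberately swaps in the simplest possible technique, a brute-force search over pairs of terms from a small hypothetical library `LIBRARY` with coefficients fitted by least squares, and is not the deep-learning-based method described above; the library, the data, and the two-term restriction are all assumptions.

```python
# Hypothetical sketch of symbolic regression by brute-force enumeration
# (not a deep-learning method): fit y ~ c1*f(x) + c2*g(x) for all term pairs.
import itertools
import math

# Hypothetical library of candidate primitive terms.
LIBRARY = {
    "x": lambda x: x,
    "x^2": lambda x: x * x,
    "x^3": lambda x: x ** 3,
    "sin(x)": math.sin,
    "cos(x)": math.cos,
    "exp(x)": math.exp,
}


def fit_two_terms(f, g, xs, ys):
    """Least-squares coefficients (c1, c2) and mean squared error."""
    fv = [f(x) for x in xs]
    gv = [g(x) for x in xs]
    sff = sum(a * a for a in fv)
    sgg = sum(b * b for b in gv)
    sfg = sum(a * b for a, b in zip(fv, gv))
    sfy = sum(a * y for a, y in zip(fv, ys))
    sgy = sum(b * y for b, y in zip(gv, ys))
    det = sff * sgg - sfg * sfg
    c1 = (sgg * sfy - sfg * sgy) / det
    c2 = (sff * sgy - sfg * sfy) / det
    mse = sum((c1 * a + c2 * b - y) ** 2
              for a, b, y in zip(fv, gv, ys)) / len(xs)
    return c1, c2, mse


def search(xs, ys):
    """Return (mse, expression string) of the best-fitting term pair."""
    best = None
    for (n1, f), (n2, g) in itertools.combinations(LIBRARY.items(), 2):
        c1, c2, mse = fit_two_terms(f, g, xs, ys)
        if best is None or mse < best[0]:
            best = (mse, f"{c1:.3f}*{n1} {c2:+.3f}*{n2}")
    return best


xs = [i / 10 for i in range(-20, 21)]
ys = [1.5 * x * x - 2.0 * math.sin(x) for x in xs]  # hidden law to recover
print(search(xs, ys))  # expression part: 1.500*x^2 -2.000*sin(x)
```

Restricting the search to two terms acts as a crude preference for concise expressions; exhaustive enumeration, however, grows combinatorially with the size of the library and the number of allowed terms.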

To enhance the reasoning capabilities of deep networks, we were the first to propose a general and efficient method for embedding combinatorial solvers as building blocks in neural networks [ ]. This method has enabled us to improve the state of the art in several computer vision problems [ ] and to improve generalization in reinforcement learning [ ].
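
The core difficulty is that a combinatorial solver returns discrete outputs, so its true gradient is zero almost everywhere. The sketch below shows one scheme for obtaining an informative gradient anyway, assumed here for illustration: the solver is treated as a black box that minimizes a linear cost ⟨w, y⟩, and the backward pass shifts the costs in the direction of the incoming loss gradient, calls the solver a second time, and uses the difference of the two solutions, ∇w ≈ -(y(w) - y(w + λ ∂L/∂y)) / λ. The toy argmin solver, the squared loss, λ = 1, and the learning rate are hypothetical.

```python
# Hypothetical sketch: gradient through a black-box combinatorial solver
# via a perturbed second solver call (assumed scheme, toy problem).


def solver(w):
    """Toy combinatorial solver: one-hot vector of the cheapest item,
    i.e. the minimizer of the linear cost <w, y> over one-hot vectors y."""
    best = min(range(len(w)), key=lambda k: w[k])
    return [1.0 if k == best else 0.0 for k in range(len(w))]


def blackbox_backward(w, y, grad_y, lam):
    """Approximate dL/dw given y = solver(w) and the incoming dL/dy."""
    w_pert = [wi + lam * gi for wi, gi in zip(w, grad_y)]
    y_pert = solver(w_pert)  # second call to the unmodified solver
    return [-(yi - ypi) / lam for yi, ypi in zip(y, y_pert)]


target = [0.0, 0.0, 1.0]  # we want the solver to pick item 2
w = [1.0, 2.0, 3.0]       # costs, e.g. produced by an upstream network
lam, lr = 1.0, 1.0
for _ in range(3):
    y = solver(w)
    grad_y = [2.0 * (yi - ti) for yi, ti in zip(y, target)]  # squared loss
    grad_w = blackbox_backward(w, y, grad_y, lam)
    w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
print(w, solver(w))  # [3.0, 2.0, 1.0] [0.0, 0.0, 1.0]
```

Over the three gradient steps the costs move from [1, 2, 3] to [3, 2, 1], so the solver switches from item 0 to the targeted item 2. The solver itself is never modified or differentiated; the parameter λ controls how far the costs are perturbed before the second call.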

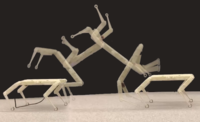

To enable successful learning, the robotic hardware needs to improve as well, especially the robot's perception of its surroundings. Here we contribute by designing new haptic sensors that are powered by machine learning [ ].

Reinforcement Learning and Control

In several research projects, we investigate data-driven approaches to the control of robotic systems. By combining optimization and reinforcement learning, intrinsic motivation, and hierarchical planning, we are tackling various challenges arising in robotic systems. Please browse through the research topics in the m...

Deep Learning

Deep learning is the central tool in our research for obtaining learned representations, fitting functions such as policies or value functions, and learning internal models. While applying deep learning techniques to our core focus of autonomous learning, we frequently need to develop new methods. Quite often, we stumble upon unsolved or puzzl...

Haptic Sensing

The rapid evolution of robotic technologies brings practical benefits in various physical application areas. In complex, changing, and especially human-involved scenarios, a robot must be well equipped to perceive the interactions between its own body and other objects. Due to the visual occlusion and the small scale of the deformat...

ML for Science

In various collaborations, we use machine learning methods in other scientific domains to advance our understanding and gain new insights. One of our own machine learning methods, called the equation learner, is particularly suitable for applications in the sciences, as it attempts to find very concise analytical equations for d...

Previous Research Projects

Research conducted by members of the group prior to joining the Max Planck Institute for Intelligent Systems is presented in this category.