2024

2022

Richardson, B. A., Kuchenbecker, K. J., Martius, G.

A Sequential Group VAE for Robot Learning of Haptic Representations

pages: 1-11, Workshop paper (8 pages) presented at the CoRL Workshop on Aligning Robot Representations with Humans, Auckland, New Zealand, December 2022 (misc)

Andrussow, I., Sun, H., Kuchenbecker, K. J., Martius, G.

A Soft Vision-Based Tactile Sensor for Robotic Fingertip Manipulation

Workshop paper (1 page) presented at the IROS Workshop on Large-Scale Robotic Skin: Perception, Interaction and Control, Kyoto, Japan, October 2022 (misc)

2021

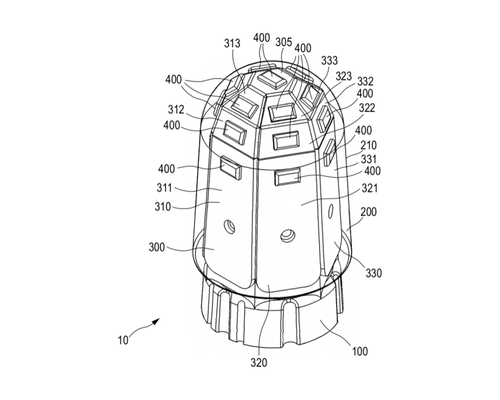

Sun, H., Martius, G., Kuchenbecker, K. J.

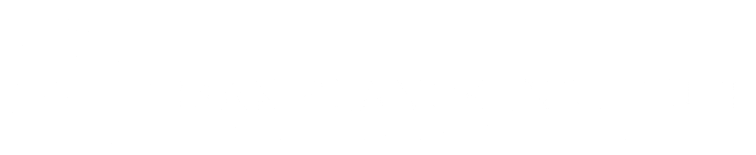

Sensor arrangement for sensing forces and methods for fabricating a sensor arrangement and parts thereof

(PCT/EP2021/050230), Max Planck Institute for Intelligent Systems, Max Planck Ring 4, January 2021 (patent)

Sun, H., Martius, G., Kuchenbecker, K. J.

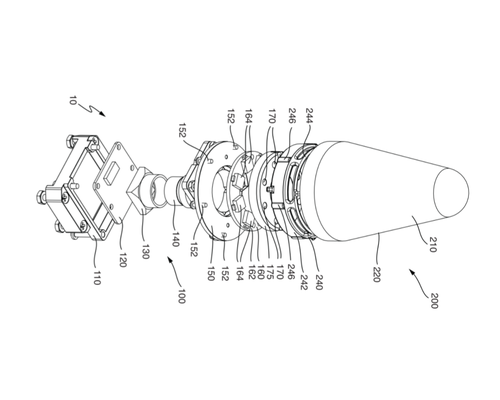

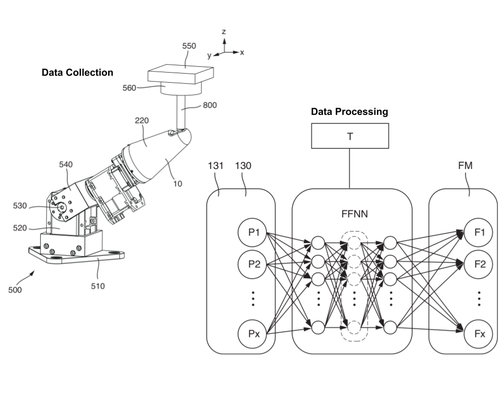

Method for force inference, method for training a feed-forward neural network, force inference module, and sensor arrangement

(PCT/EP2021/050231), Max Planck Institute for Intelligent Systems, Max Planck Ring 4, January 2021 (patent)

Werner, M., Junginger, A., Hennig, P., Martius, G.

Informed Equation Learning

arXiv, 2021 (misc)

2020

Sun, H., Martius, G., Lee, H., Spiers, A., Fiene, J.

Method for Force Inference of a Sensor Arrangement, Methods for Training Networks, Force Inference Module and Sensor Arrangement

(PCT/EP2020/083261), Max Planck Institute for Intelligent Systems, Max Planck Ring 4, November 2020 (patent)

Spiers, A., Sun, H., Lee, H., Martius, G., Fiene, J., Seo, W. H.

Sensor Arrangement for Sensing Forces and Method for Fabricating a Sensor Arrangement

(PCT/EP2020/083260), November 2020 (patent)

2016

Martius, G., Lampert, C. H.

Extrapolation and learning equations

2016, arXiv preprint 1610.02995 (misc)

2014

Martius, G., Der, R., Herrmann, J. M.

Robot Learning by Guided Self-Organization

In Guided Self-Organization: Inception, 9, pages: 223-260, Emergence, Complexity and Computation, Springer Berlin Heidelberg, 2014 (incollection)

2013

Der, R., Martius, G.

Behavior as broken symmetry in embodied self-organizing robots

In Advances in Artificial Life, ECAL 2013, pages: 601-608, MIT Press, 2013 (incollection)

2011

Martius, G., Herrmann, J. M.

Tipping the Scales: Guidance and Intrinsically Motivated Behavior

In Advances in Artificial Life, ECAL 2011, pages: 506-513, (Editors: Tom Lenaerts and Mario Giacobini and Hugues Bersini and Paul Bourgine and Marco Dorigo and René Doursat), MIT Press, 2011 (incollection)

2010

Martius, G., Hesse, F., Güttler, F., Der, R.

LpzRobots: A free and powerful robot simulator

http://robot.informatik.uni-leipzig.de/software, 2010 (misc)

Der, R., Martius, G.

Playful Machines: Tutorial

http://robot.informatik.uni-leipzig.de/tutorial?lang=en, 2010 (misc)

Martius, G., Herrmann, J. M.

Taming the Beast: Guided Self-organization of Behavior in Autonomous Robots

In From Animals to Animats 11, 6226, pages: 50-61, LNCS, Springer, 2010 (incollection)